Photo by Aron Visuals on Unsplash

Improving DynamoDB's TTL accuracy to minutes (or better)

When "usually within two days" isn't good enough for you!

Everybody knows how annoying it is when things drag on way too long! Whether it's a refinement meeting that never seems to end, a story from a coworker that just won't get to the point, or an item in your DynamoDB table that you specifically told to be gone at a certain moment but that just won't leave your database! According to the AWS documentation, you can set a time-to-live (TTL) on DynamoDB items, which AWS will then remove after that time. However, as the docs also state:

Because TTL is meant to be a background process, the nature of the capacity used to expire and delete items via TTL is variable (but free of charge). TTL typically deletes expired items within 48 hours of expiration.

And even though in practice items are often deleted within 15 minutes, there is no guarantee that they will be, and that can be a problem for you!

In this blog we will discuss a scalable setup that you can use to expire items with an accuracy you define yourself.

The architecture

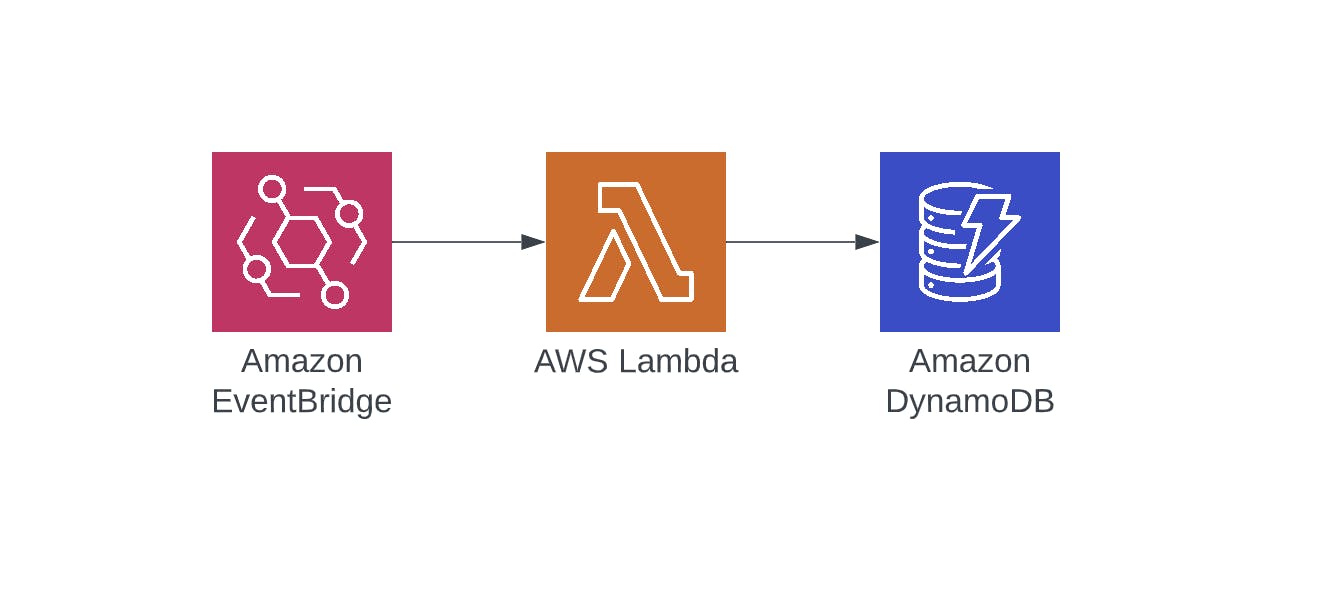

To get rid of your items faster, we can use the architecture shown above. A scheduled EventBridge rule triggers a lambda every minute. The lambda queries DynamoDB for expired items and deletes them.

In the sections below, we'll provide the code to create this infrastructure and the lambda code to query and delete the items.

The data model

Besides using the TTL field in DynamoDB, we have to create another field which contains not an expiration TIME but an expiration WINDOW, with a granularity you can define yourself. The expirationWindow defines in which time window the item should expire. You are free to choose this window; the only requirement is that its length is a multiple of the interval at which you trigger the lambda. So if you trigger the lambda every 5 minutes, the time window should be 5, 10, 15, etc. minutes long (more on that below).

To gain one-minute accuracy, we also use a one-minute expirationWindow granularity. We will query this window later on to fetch all items that should be expired.

The expirationWindow can be constructed however you like, but I chose a format of the start and end datetime, separated by an underscore: StartISOTimestamp_EndISOTimestamp. For example, with a one-minute granularity a window could look like 2022-07-19T21:27:00.000Z_2022-07-19T21:28:00.000Z.

An example item in the table now looks like:

| itemId (partition key) | ttl | expirationWindow | other attributes |
| --- | --- | --- | --- |
| 0001 | 1658266025 | 2022-07-19T21:27:00.000Z_2022-07-19T21:28:00.000Z | ... |
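To make the two attributes concrete, here is a minimal sketch of how a writer could derive both ttl and expirationWindow from a single expiry date before storing the item. The helper names windowFor and buildExpiringItem are hypothetical and not part of the original setup. The sketch assumes whole-second expiry times and the StartISOTimestamp_EndISOTimestamp format described above.

```typescript
// Hypothetical helpers (not from the original post), assuming whole-second expiry times.
interface ExpiringItem {
  itemId: string;
  ttl: number; // epoch seconds, used by DynamoDB's native TTL
  expirationWindow: string; // partition key of the global secondary index
}

// Window that contains `date`, formatted as Start_End. An expiry exactly on a
// boundary belongs to the window that ends at that boundary.
const windowFor = (date: Date, granularityInSeconds = 60): string => {
  const windowMs = granularityInSeconds * 1000;
  const endMs = Math.ceil(date.getTime() / windowMs) * windowMs;
  const startMs = endMs - windowMs;
  return `${new Date(startMs).toISOString()}_${new Date(endMs).toISOString()}`;
};

const buildExpiringItem = (itemId: string, expiresAt: Date): ExpiringItem => ({
  itemId,
  ttl: Math.floor(expiresAt.getTime() / 1000),
  expirationWindow: windowFor(expiresAt),
});

// Reproduces the example row shown above
const example = buildExpiringItem('0001', new Date(1658266025 * 1000));
```

The resulting object could then be used as the item of a put request, together with any other attributes you need.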

To be able to query this window, we have to create a so-called global secondary index on the table. It's basically a copy of your table, but with a different partition key and optional sort key to support the queries we need.

So the index expirationWindowIndex has the following structure:

| expirationWindow (partition key) | ttl (sort key) | itemId | other attributes |
| --- | --- | --- | --- |
| 2022-07-19T21:27:00.000Z_2022-07-19T21:28:00.000Z | 1658266025 | 0001 | ... |

This index allows us to query all items within a certain expirationWindow (because it's the partition key), and even filter on the ttl attribute (because it's the sort key).

NOTE: In this blog we use scheduled EventBridge rules (formerly CloudWatch Events) to trigger our lambda function. These have a minimum granularity of 1 minute, so we also keep our expiration window at a granularity of one minute.

The infrastructure

To deploy the infrastructure that supports this setup, you can create a CDK stack like the one below. It creates a table (with TTL enabled and a global secondary index on the expirationWindow), an event rule and a lambda.

```typescript
import * as cdk from 'aws-cdk-lib';
import * as dynamodb from 'aws-cdk-lib/aws-dynamodb';
import * as events from 'aws-cdk-lib/aws-events';
import * as targets from 'aws-cdk-lib/aws-events-targets';
import * as iam from 'aws-cdk-lib/aws-iam';
import * as lambdaJs from 'aws-cdk-lib/aws-lambda-nodejs';
import { Construct } from 'constructs';

export class DeleteItemsStack extends cdk.Stack {
  public table: dynamodb.Table;

  constructor(scope: Construct, id: string, props: cdk.StackProps) {
    super(scope, id, props);

    /**
     * The table itself
     */
    this.table = new dynamodb.Table(this, 'expirationTable', {
      partitionKey: { name: 'itemId', type: dynamodb.AttributeType.STRING },
      tableName: 'expirationTable',
      billingMode: dynamodb.BillingMode.PAY_PER_REQUEST,
      timeToLiveAttribute: 'ttl',
    });

    /**
     * Deleting expired items: query and delete expired items every minute
     */
    const indexName = 'expirationWindowIndex';
    this.table.addGlobalSecondaryIndex({
      indexName: indexName,
      partitionKey: { name: 'expirationWindow', type: dynamodb.AttributeType.STRING },
      sortKey: { name: 'ttl', type: dynamodb.AttributeType.NUMBER },
    });

    const deleteExpiredItemsFunction = new lambdaJs.NodejsFunction(this, 'deleteExpiredItemsFunction', {
      entry: 'PATH_TO_LAMBDA_HERE',
      timeout: cdk.Duration.minutes(5), // To support a load of API calls if needed :P. And it's still faster than DynamoDB TTL.
      memorySize: 2048,
      environment: {
        TABLE_NAME: this.table.tableName,
        TABLE_INDEX_NAME: indexName,
      },
    });

    // Create an event rule that goes off every minute, and transform the payload
    const eventRule = new events.Rule(this, 'scheduleRule', {
      schedule: events.Schedule.cron({ minute: '*/1' }),
    });
    eventRule.addTarget(new targets.LambdaFunction(deleteExpiredItemsFunction, {
      event: events.RuleTargetInput.fromObject({
        time: events.EventField.fromPath('$.time'),
      }),
    }));

    // Allow the lambda to query the index and delete items from the table
    deleteExpiredItemsFunction.addToRolePolicy(
      new iam.PolicyStatement({
        actions: ['dynamodb:Query', 'dynamodb:BatchWriteItem'],
        resources: [
          this.table.tableArn,
          `${this.table.tableArn}/index/${indexName}`,
        ],
        effect: iam.Effect.ALLOW,
      }),
    );
  }
}
```

For the event rule, note that a custom mapping is used, so that the event payload invoking the lambda contains only one key: the exact 0-second timestamp of the minute for which the event was scheduled. For example:

```json
{
  "time": "2022-07-19T20:55:00.000Z"
}
```

With this code, we now have all the infra we need to delete our items!

The lambda

The functionality used to query and delete items consists of 3 steps:

- Process the EventBridge payload to check what expirationWindow should be queried
- Query that expirationWindow to get all items that should be deleted
- Perform a BatchWriteItem command to delete the items.
  - Split the list of items in chunks of 25 items
  - Delete the chunk
  - Retry in case any items fail processing up to 3 times
  - Repeat until all items and chunks are processed

The code for this can be found below:

```typescript
/**
 * This lambda polls a table filled with items and checks if any of the items are expired.
 * If so, it deletes those entries from the table.
 */
import * as dynamo from '@aws-sdk/client-dynamodb';
import * as libDynamo from '@aws-sdk/lib-dynamodb';
import { Context } from 'aws-lambda';

const dynamoClient = new dynamo.DynamoDBClient({ region: 'eu-west-1' }); // You can change this to any region of course
const docClient = libDynamo.DynamoDBDocumentClient.from(dynamoClient);

interface ScheduledEvent {
  time: string;
}

/**
 * Construct a start- and end ISO timestamp, representing the partition key of the table's Global Secondary Index (GSI)
 * @param date The date of the event to construct the expirationWindow for (assumed to be a whole-second timestamp)
 * @param granularityInSeconds Length of the window in seconds
 * @returns e.g. "2022-06-23T15:50:00.000Z_2022-06-23T15:51:00.000Z"
 */
export const getExpirationWindow = (date: Date, granularityInSeconds = 60) => {
  // The number of milliseconds in one window, used to round to the window's edges
  const numberOfMsInWindow = 1000 * granularityInSeconds;
  // Subtract a second to make sure that floor and ceil give different results for a time falling exactly on a window's edge (12:00:00)
  const expirationDateMinus1 = new Date(date.getTime());
  expirationDateMinus1.setSeconds((expirationDateMinus1.getSeconds() - 1));
  const endWindow = new Date(Math.ceil(date.getTime() / numberOfMsInWindow) * numberOfMsInWindow).toISOString();
  const startWindow = new Date(Math.floor(expirationDateMinus1.getTime() / numberOfMsInWindow) * numberOfMsInWindow).toISOString();
  return startWindow + '_' + endWindow;
};

/**
 * Queries 1 partition of the Global Secondary Index (GSI) of the table,
 * and filters on items that have been expired by using the sort key
 * @param expirationWindow Partition key of the GSI of the table, containing all items expiring in this N minute window
 * @param expirationTime Epoch timestamp (in seconds) up to which items count as expired
 * @returns A list of item IDs to delete
 */
const queryExpiredItems = async (
  expirationWindow: string,
  expirationTime: number,
  tableName: string,
  tableIndex: string
) => {
  const command = new libDynamo.QueryCommand({
    IndexName: tableIndex,
    TableName: tableName,
    // "<=" so that an item expiring exactly on the window's end (which getExpirationWindow
    // assigns to this window) is picked up as well
    KeyConditionExpression: '#expirationWindow = :expirationWindow AND #expirationTime <= :expirationTime',
    ExpressionAttributeNames: {
      '#expirationWindow': 'expirationWindow',
      '#expirationTime': 'ttl',
    },
    ExpressionAttributeValues: {
      ':expirationWindow': expirationWindow,
      ':expirationTime': expirationTime,
    },
  });
  // Note: a single Query returns at most 1 MB of data. For larger partitions,
  // keep querying with ExclusiveStartKey set to the returned LastEvaluatedKey.
  const response = await docClient.send(command);
  return response?.Items?.map(item => (item.itemId));
};

/**
 * Create the parameters for the BatchWrite request to delete a batch of items
 * @param itemIds List of item IDs to be deleted
 */
const createDeleteRequestParams = (itemIds: string[], tableName: string) => {
  const deleteRequests: { [key: string]: any }[] = itemIds.map((itemId) => (
    {
      DeleteRequest: {
        Key: {
          itemId,
        },
      },
    }));
  const paramsDelete: libDynamo.BatchWriteCommandInput = {
    RequestItems: {},
  };
  paramsDelete.RequestItems![tableName] = deleteRequests;
  return paramsDelete;
};

/**
 * Deletes a batch (max 25) of items from the table
 * @param itemIds List of item IDs to delete from the table
 * @returns unprocessed items and whether or not errors have occurred.
 */
const deleteBatch = async (itemIds: string[], tableName: string) => {
  if (itemIds.length > 25) {
    throw new Error(`BatchWrite item supports only up to 25 items. Got ${itemIds.length}`);
  }
  let unprocessedItems: string[] = [];
  const requestParams = createDeleteRequestParams(itemIds, tableName);
  try {
    const data = await docClient.send(new libDynamo.BatchWriteCommand(requestParams));
    // Check if any unprocessed data object is present, and it is populated with at least one item for this table
    if (data.UnprocessedItems && data.UnprocessedItems[tableName]) {
      unprocessedItems = data.UnprocessedItems[tableName].map(
        (request) => (request.DeleteRequest?.Key!).itemId,
      );
    }
    // At least a partial success, maybe with unprocessed items
    return {
      unprocessed: unprocessedItems,
      hasErrors: false,
    };
  } catch (err) {
    // Error in deleting items: report the whole batch as unprocessed so the caller can retry it
    return {
      unprocessed: itemIds,
      hasErrors: true,
    };
  }
};

/**
 * Delete a set of items from the table.
 * @param items List of itemIds of expired items
 * @param retriesLeft when errors occur, unprocessed items are retried N times.
 */
const deleteExpiredItems = async (items: string[], tableName: string, retriesLeft = 3) => {
  let unprocessedItems: string[] = [];
  if (retriesLeft <= 0) {
    throw new Error(`unable to delete the desired ${items.length} items`);
  }
  // Delete in chunks of 25 items
  const chunkSize = 25;
  for (let i = 0; i < items.length; i += chunkSize) {
    const batchOfItems = items.slice(i, i + chunkSize);
    const response = await deleteBatch(batchOfItems, tableName);
    unprocessedItems = unprocessedItems.concat(response.unprocessed);
  }
  // Retry the unprocessed items for as long as there are retries left
  if (unprocessedItems.length > 0) {
    await deleteExpiredItems(unprocessedItems, tableName, retriesLeft - 1);
  }
};

/**
 *
 * @param event A scheduled event from EventBridge with custom payload, providing the exact time of the cron-event.
 * @param _context lambda context object
 * @returns nothing; resolves once all expired items in the window have been deleted
 */
// eslint-disable-next-line @typescript-eslint/no-unused-vars
export const lambdaHandler = async (event: ScheduledEvent, _context: Context) => {
  if (!process.env.TABLE_NAME || !process.env.TABLE_INDEX_NAME) {
    throw new Error('TABLE_NAME and TABLE_INDEX_NAME should be defined but are not');
  }
  const tableName = process.env.TABLE_NAME!;
  const tableIndex = process.env.TABLE_INDEX_NAME!;

  // Step 1: read the incoming event payload from EventBridge and process it into
  // an expirationWindow and timestamp to query the datastore.
  const eventDatetime = new Date(event.time);
  const expirationWindow = getExpirationWindow(eventDatetime);
  const expirationTimestamp = Math.round(eventDatetime.getTime() / 1000);

  // Step 2: Query the expired items
  const items = await queryExpiredItems(expirationWindow, expirationTimestamp, tableName, tableIndex);
  if (items) {
    // Step 3: Delete the expired items
    await deleteExpiredItems(items, tableName);
  }
};
```

Choosing the expiration window

With the setup above, the length of the expiration window needs to be a multiple of the interval at which the lambda is triggered. To see why, let's consider the following example:

A trigger happens every two minutes, and the window is 5 minutes (not a multiple). This means that at the 4th minute the items are queried from the 0-5 minute window, deleting only the items that expired in minutes 0-4. At the next trigger, on the 6th minute, the items from the 5-10 minute window are queried, deleting only those that expired in minutes 5-6. Items expiring between minute 4 and 5 are never picked up!
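The gap in this example can be reproduced with a small simulation. This is only a sketch under simplifying assumptions: time is counted in whole minutes, triggers fire at every multiple of the trigger interval, and each trigger only queries the window it falls in (a trigger exactly on a window's edge queries the window that just ended). The function name missedMinutes is hypothetical.

```typescript
// Hypothetical simulation: returns every minute m (0 <= m < horizon) for which
// items expiring during minute m (between m and m + 1) are never queried.
const missedMinutes = (triggerEvery: number, windowSize: number, horizon: number): number[] => {
  const missed: number[] = [];
  for (let m = 0; m < horizon; m++) {
    // End of the window that items expiring during minute m belong to
    const windowEnd = (Math.floor(m / windowSize) + 1) * windowSize;
    // First trigger that fires once these items have expired
    const firstTrigger = Math.ceil((m + 1) / triggerEvery) * triggerEvery;
    // If that trigger already falls in a later window, the items are skipped
    if (firstTrigger > windowEnd) {
      missed.push(m);
    }
  }
  return missed;
};

const gaps = missedMinutes(2, 5, 20); // → [4, 14]
const noGaps = missedMinutes(1, 5, 20); // → []
```

With a two-minute trigger and a five-minute window, the items expiring during minutes 4 and 14 are skipped. When the window is a multiple of the trigger interval, every window's end coincides with a trigger and nothing is skipped.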

Fortunately the native TTL feature will kick in at some point, so the items will be deleted in the end. You can add logic to the lambda yourself to also query previous windows, but I chose not to do this to keep things simpler.
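If you do want to cover such gaps, one option is to query a few earlier windows on each trigger as well. The sketch below only computes the partition keys to query. The name windowsToQuery and its lookback parameter are hypothetical, and it assumes the same Start_End window format with windows aligned to the epoch. Each returned key would then be queried with the same ttl condition as the current window.

```typescript
// Hypothetical helper: partition keys for the window containing `eventTime`,
// followed by `lookback` earlier windows (most recent first).
const windowsToQuery = (eventTime: Date, granularityInSeconds: number, lookback: number): string[] => {
  const windowMs = granularityInSeconds * 1000;
  const currentEndMs = Math.ceil(eventTime.getTime() / windowMs) * windowMs;
  const keys: string[] = [];
  for (let i = 0; i <= lookback; i++) {
    const endMs = currentEndMs - i * windowMs;
    const startMs = endMs - windowMs;
    keys.push(`${new Date(startMs).toISOString()}_${new Date(endMs).toISOString()}`);
  }
  return keys;
};

// A trigger at minute 6 with 5-minute windows: the current window plus the previous one
const keys = windowsToQuery(new Date('2022-07-19T21:06:00.000Z'), 300, 1);
```

Keep in mind that every extra window is an extra query per trigger, so the lookback is best kept as small as the mismatch between window and trigger interval requires.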

Cost implications of this setup

Adding global secondary indexes to your table can significantly increase cost for write-heavy databases, since you not only pay for the record written to the table, but also for any indexes affected by your write. This effectively doubles your write cost (assuming you have no other GSIs configured).

Also, deletes performed by TTL are free, while actively deleting items counts as a write and therefore causes additional costs.

Further improving accuracy

In the described setup, scheduled events are used to trigger the delete functionality. Since these only have minute granularity, we can also only query every minute. To improve on this, you can write your own scheduling logic to support, for example, second-level granularity.
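One possible approach, sketched here under assumptions: keep the one-minute trigger, but let each invocation work through several sub-minute ticks, waiting until each tick has passed before querying the matching (smaller) expiration window. The function below only computes those tick times. The name ticksWithinMinute is hypothetical, the step is assumed to divide 60 evenly, and the waiting and querying themselves are left out.

```typescript
// Hypothetical helper: the tick times inside the minute that starts at `minuteStart`.
// Each tick marks the end of one sub-minute expiration window.
const ticksWithinMinute = (minuteStart: Date, stepSeconds: number): Date[] => {
  if (stepSeconds <= 0 || Math.floor(stepSeconds) !== stepSeconds || 60 % stepSeconds !== 0) {
    throw new Error(`stepSeconds must be a positive divisor of 60, got ${stepSeconds}`);
  }
  const ticks: Date[] = [];
  for (let offset = stepSeconds; offset <= 60; offset += stepSeconds) {
    ticks.push(new Date(minuteStart.getTime() + offset * 1000));
  }
  return ticks;
};

// Six ticks, from 20:55:10 up to and including 20:56:00
const ticks = ticksWithinMinute(new Date('2022-07-19T20:55:00.000Z'), 10);
```

With this approach each invocation runs for roughly the full minute, so the expiration windows written to the items need the same sub-minute granularity and the lambda's runtime cost goes up accordingly.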

Conclusion

Using the provided setup, you can query and delete items with more accuracy than DynamoDB's native TTL feature. TTL can still be used, but in addition we can actively query and delete items that simply take too long to be expired.